MACHINE LEARNING PROJECTS

Description

As a graduate student at Carnegie Mellon, I took two classes related to machine learning, both taught by Carolyn Rose - Applied Machine Learning and Computational Models of Discourse Analysis.

Though my research doesn't directly touch on topics related to machine learning, this work helped me develop a better understanding of how digital systems make judgements from user data.

Tools

Weka

SIDE

Languages

Python

Java

Uncertainty Online

This work was the first step in a larger research project aimed at reducing the uncertainty that Internet users encounter when trying to judge the validity and intent of information they find online. This particular piece of the project was centered around classifying webpages by type. For the purposes of this project, we selected 7 types of webpages available online: news, scholarly, commercial, forums, personal, organization, and blogs.

This project was a collaboration with Jen Mankoff and Haakon Faste.

Data Collection

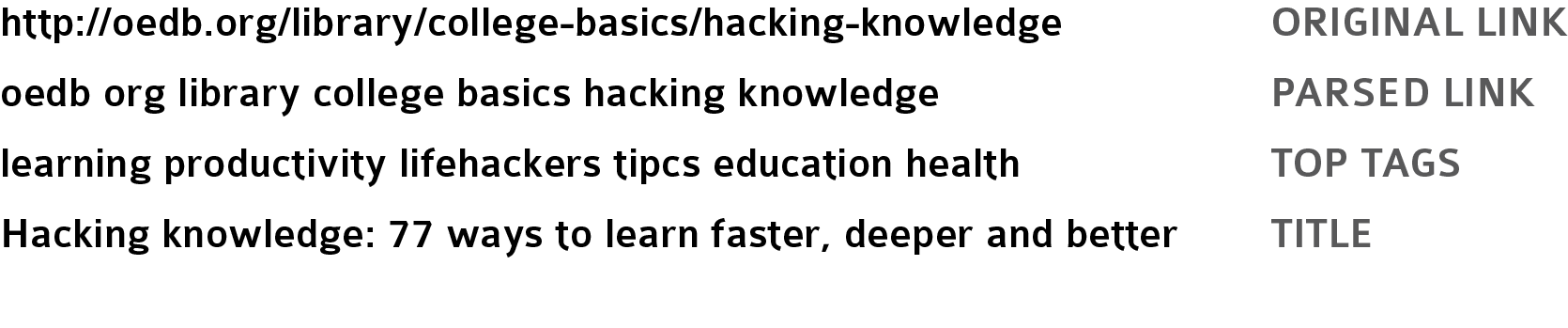

The training and testing data for this project was comprised of approximately 370 links. These links were collected using the Delicious API. I also used the API to grab the tags associated with each link. Each link was then processed using a a number of python scripts. One script broke the url into its component parts, and another scraped the page to capture page sections like the title and body text, and the last pulled features, such as the frequency of scientific language and the ratio of 1st to 2nd person pronouns, from the page's html.

Data Analysis

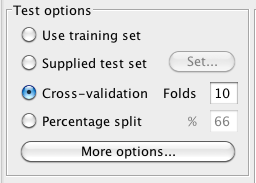

All of the links and data that were collected were pushed into a spreadsheet and analyzed using Weka, a machine learning toolkit.

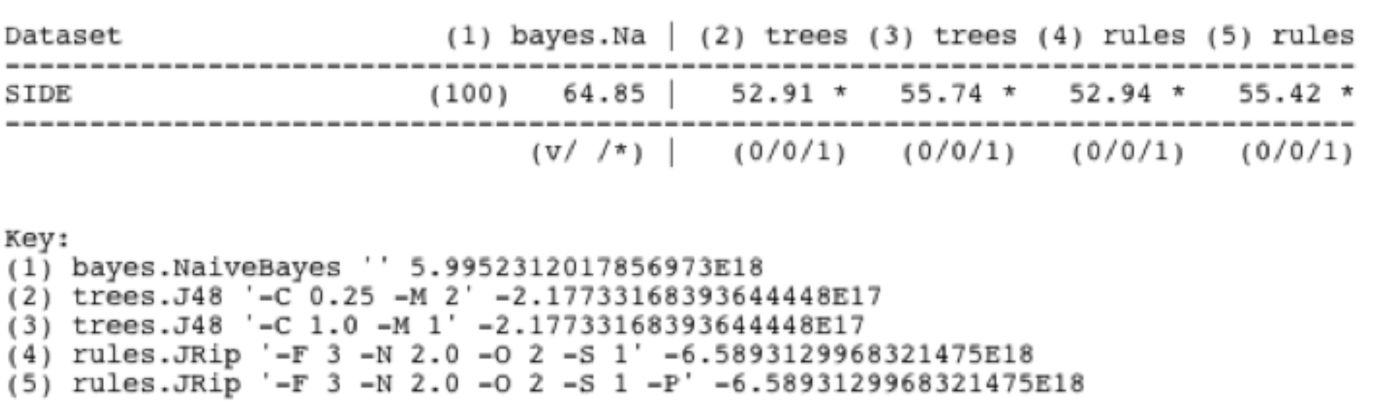

The data was analyzed using different configurations of the following classifiers: Naive Bayes, the j48 decision tree, and jRip, a rule-based classifier.

Results

The algorithm that yielded the best results was Naive Bayes. Because there were 7 categories of sites, the minimum baseline for this dataset was 14 percent of links correctly classified, which is the result that would occur if every link was classified as just one type. (Note that there are other baselines you could use for this type of project.) Naive Bayes performed significantly above this baseline, with about 64 percent of links classified correctly. These results were generated using a small sample size of links, and I believe that additional links would improve future performance.